Today's discussion

Understanding AI Governance - Part 1

Navigating the Future of Artificial Intelligence

What happens when the world's most powerful technology meets its greatest regulatory challenge? As artificial intelligence reshapes our world, one question looms larger than ever: How do we ensure AI benefits humanity while minimizing its risks?

The Building Blocks of AI Systems: A Foundation for Governance

At its core, an AI system is far more complex than most people realize. Like a sophisticated orchestra, it requires multiple components working in harmony to create its output. The EU AI Act defines an AI system as a machine-based system designed to operate with varying levels of autonomy that may exhibit adaptiveness after deployment.

Core Components and Their Interaction

Modern AI systems comprise four essential elements:

- Data: The lifeblood that trains and operates the system

- Models: The cognitive engine that processes information

- Output: The system's decisions, predictions, or recommendations

- Context: The environment in which the system operates

These components are described in the OECD's Framework for the Classification of AI Systems. They do not operate in isolation. Instead, they form an intricate web of interactions, much like a digital ecosystem where multiple AI models communicate and collaborate to achieve broader objectives.

The Global Landscape of AI Governance

A Framework Built on Multiple Pillars

The governance of AI isn't just about regulations. It's about creating a comprehensive framework that ensures responsible innovation.

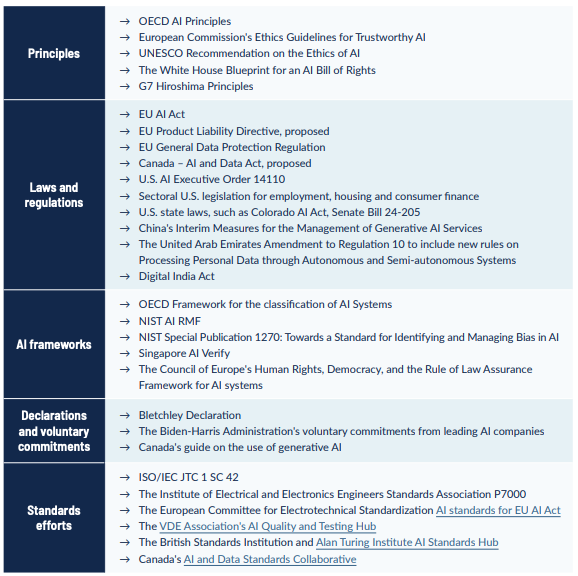

Lawmakers and policymakers have crafted an extensive ecosystem of AI governance materials, from foundational principles to detailed technical standards. This complex tapestry of regulatory guidance includes principles, laws, policies, frameworks, declarations, voluntary commitments, standards and emerging best practices.

The interconnected nature of these resources creates both opportunities and challenges, as each element influences and shapes the others. For instance, international principles often inform national legislation, while technical standards help operationalize legal requirements. This dynamic interplay makes navigation complex but also ensures a comprehensive approach to AI governance.

To show how much attention this topic is getting: the research group AlgorithmWatch found 167 different sets of AI principles out there. That's a lot of smart people thinking about how to make sure AI develops in ways that benefit everyone. These guidelines aim to ensure that as AI becomes more powerful, it stays focused on helping people and society rather than causing problems.

- Example of AI Principles and Guidelines: there's a growing worldwide effort to set clear guidelines for how AI should be developed and used ethically. Two major organizations, the OECD and UNESCO, have created sets of principles (the OECD AI Principles and the UNESCO Recommendation on the Ethics of AI) that are becoming highly influential. While these guidelines aren't legally binding like laws, many countries are choosing to follow them when creating their own rules about AI.

Think of it like this: these principles work as helpful recommendations that countries can adapt to their own needs. They tackle important questions like "How do we make sure AI is fair?" or "How can we keep AI systems transparent?" Government officials, lawyers and tech companies then use these guidelines when they're figuring out how to handle AI in their own work.

- Example of Legal Frameworks: the EU AI Act stands as a pioneer in this space, introducing the world's first comprehensive AI regulation based on risk levels, setting a model that many other countries are following. Think of it like a traffic light system: some AI applications are completely forbidden (red light) because they're deemed too risky, while others require strict oversight (yellow light) or face lighter regulation (green light). For instance, AI systems that manipulate human behavior in harmful ways are banned, while AI used in critical infrastructure faces rigorous controls.

The risk-based approach categorizes AI systems by their potential impact:

- Unacceptable Risk: Systems that pose significant threats to society

- High Risk: Applications requiring strict oversight

- Limited Risk: Systems needing basic transparency

- Minimal Risk: Applications with minimal restrictions

Organizations developing or using AI need to understand their role and responsibilities under this framework. If you're providing high-risk AI systems, like those used in healthcare or law enforcement, you'll face the most stringent requirements. But even if you're just distributing or importing AI systems, you still have important obligations to meet. These requirements span different levels: from how your company is structured to handle AI governance, to specific product documentation needs, to day-to-day operational protocols for risk management and performance monitoring.
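As a rough illustration, the four risk tiers and the traffic-light analogy above could be captured in a small internal inventory. This is only a sketch: the `AISystemRecord` fields, the `triage` helper, the example system names, and the tier assigned to each example are assumptions for illustration, not legal classifications under the EU AI Act. Placing both limited and minimal risk under "green" is also a simplification of the analogy.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    """The four tiers listed above, from most to least restricted."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Traffic-light analogy from the text: red = forbidden, yellow = strict
# oversight, green = lighter regulation. Grouping LIMITED and MINIMAL
# under "green" is an assumption of this sketch.
TRAFFIC_LIGHT = {
    RiskTier.UNACCEPTABLE: "red",
    RiskTier.HIGH: "yellow",
    RiskTier.LIMITED: "green",
    RiskTier.MINIMAL: "green",
}


@dataclass
class AISystemRecord:
    """One row of a hypothetical internal AI inventory."""
    name: str
    tier: RiskTier
    role: str  # the organization's role, e.g. "provider", "importer", "distributor"


def triage(inventory):
    """Group system names by traffic-light colour."""
    groups = {"red": [], "yellow": [], "green": []}
    for record in inventory:
        groups[TRAFFIC_LIGHT[record.tier]].append(record.name)
    return groups


# Illustrative entries only; real tier assignments need legal review.
inventory = [
    AISystemRecord("behaviour-manipulation-engine", RiskTier.UNACCEPTABLE, "provider"),
    AISystemRecord("grid-load-controller", RiskTier.HIGH, "provider"),
    AISystemRecord("customer-chatbot", RiskTier.LIMITED, "distributor"),
]

print(triage(inventory))
```

An inventory like this gives a governance team a first view of which systems need attention before any detailed compliance work begins.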

- Beyond mandatory regulations, key voluntary frameworks have emerged to guide AI governance implementation. Notable examples include NIST's AI Risk Management Framework and ISO's AI Standards, which provide detailed, practical guidance for organizations. These voluntary initiatives serve multiple purposes: they help create consensus among diverse stakeholders about risk management approaches, establish common technical benchmarks, and offer concrete ways to demonstrate regulatory compliance. By following these frameworks, organizations can build robust governance systems that often exceed minimum legal requirements while fostering industry-wide best practices.

- Global AI governance often takes shape through international declarations and cross-border agreements, where nations publicly commit to shared principles and objectives. These diplomatic instruments, while not legally binding, carry significant political weight. When countries endorse these declarations, particularly at leadership levels, they signal their dedication to developing responsible AI governance frameworks and collaborating on shared challenges. Such commitments often catalyze domestic policy changes and international cooperation.

- You can also find AI laws, regulations, standards and frameworks. A practical way to navigate them is to understand the specific risks your AI systems pose and then develop comprehensive governance frameworks based on those risks. This risk-based methodology allows organizations to create adaptable governance structures that work effectively across different jurisdictional requirements and regulatory environments.

IAPP Global AI Law and Policy Tracker

This map shows the jurisdictions in focus and covered by the IAPP Global AI Law and Policy Tracker. It does not represent the extent to which jurisdictions around the world are active on AI governance legislation. Tracker last updated February 2024.

AI International Governance Landscape

Among the numerous initiatives shaping the AI governance landscape, several frameworks stand out as particularly influential and transformative (source: IAPP):

The OECD's National AI policies & strategies site hosts a comprehensive database tracking AI policy developments worldwide, featuring over 1,000 initiatives across 69 nations and the European Union. You can filter the policies by country, type of regulation, or target audience to discover relevant governance frameworks.

Download our International AI Governance Regulations Landscape.

Expert Insights and Industry Perspectives

As Kate Jones, U.K. Digital Regulation Cooperation Forum CEO, notes: "With AI poised to revolutionise many aspects of our lives, fresh cooperative governance approaches are essential. Effective collaboration between regulatory portfolios, within nations as well as across borders, is crucial."

The Challenge of Implementation

Organizations face the immense task of navigating this complex regulatory landscape.

Andrew Gamino-Cheong, Trustible AI Co-founder, emphasizes: "AI governance is about to get a lot harder. The internal complexity of governing AI is growing as more internal teams adopt AI, new AI features are built, and the systems get complex."

Building a Robust Governance Framework

Success in AI governance requires:

- Risk Assessment: Understanding your organization's unique AI risk profile

- Cross-Border Compliance: Developing frameworks that work across jurisdictions

- Stakeholder Collaboration: Working with industry partners and regulators

The Path Forward

Denise Wong from Singapore's Infocomm Media Development Authority provides a vision for the future: "It is important to take an ecosystem approach to AI governance. Policy makers and industry need to work together... to create a trusted ecosystem that promotes maximal innovation."

The Future of AI Governance

As AI continues to evolve, organizations must take proactive steps to establish robust governance frameworks. Start by:

- Assessing your AI systems' risk levels

- Implementing monitoring and documentation protocols

- Engaging with industry standards and best practices

- Building cross-functional governance teams

- Surrounding yourself with AI governance experts to stay updated on the newest regulations and processes that may apply to your business

If you have any questions, contact us at hello@karine-boucher.com

The future of AI governance lies in balancing innovation with responsibility. By understanding and implementing proper governance frameworks today, organizations can ensure they're prepared for tomorrow's challenges.

Learn more about Legal and Regulatory Compliance and read more about AI Governance.

If you wish to assess the AI Governance compliance of your company, just contact us.

Source: "AI Governance in Practice Report 2024" IAPP