Today's discussion

AI Governance in the Age of Autonomous Agents:

A Critical Look at the Evolution from LLMs to AI Agents

Have you ever wondered what happens when AI systems stop simply responding to commands and start making their own decisions? The evolution from passive language models to autonomous AI agents represents one of the most fascinating (and potentially concerning) developments in artificial intelligence.

Let's explore this transformation and its implications for our future.

Understanding the Evolution: From Simple LLMs to Autonomous Agents

In the early days of large language models (LLMs), interaction was straightforward: you asked a question (a query) and the model returned an answer. Think of it as a very knowledgeable but passive assistant. This works well for simple tasks but falls short on complex or multi-step queries.

That is changing with the emergence of AI agents.

The Three Generations of AI Systems

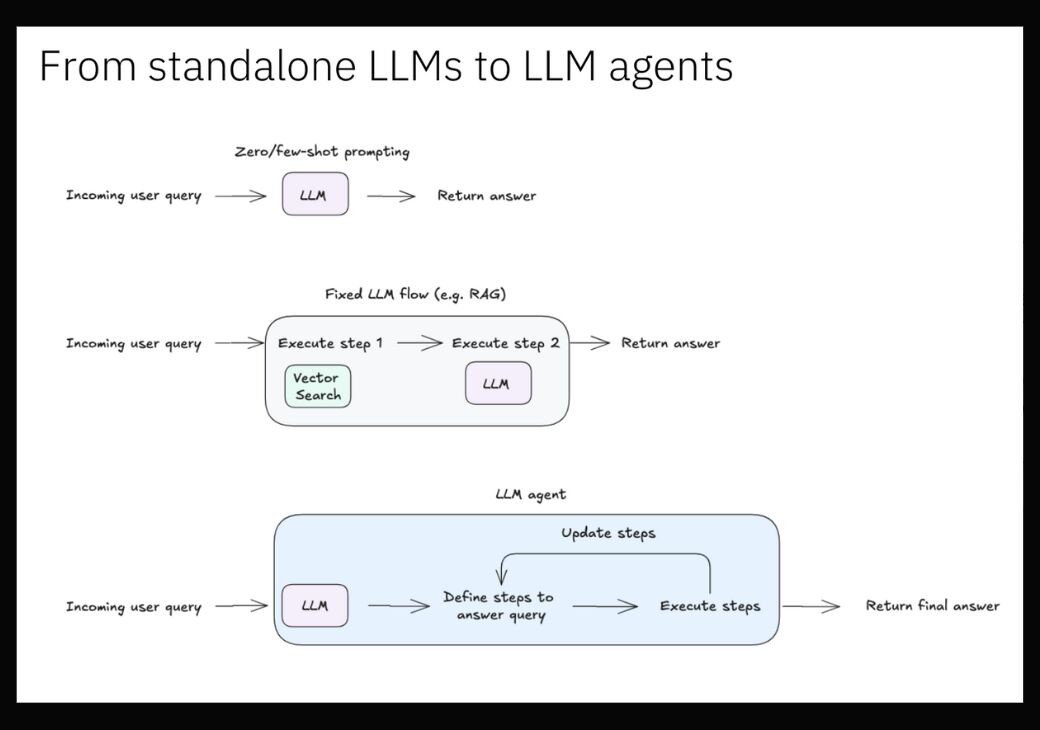

The journey from basic LLMs to autonomous agents can be broken down into three distinct stages:

- Standalone LLMs: These represent the first generation, where models like early GPT versions simply processed queries and returned responses. While impressive, they were limited to single-turn interactions without any real decision-making capability.

- Fixed LLM Workflows: The second generation introduced structured processes such as RAG (Retrieval-Augmented Generation), which combines vector search with LLM inference. This improved accuracy, but the steps were still predefined and always executed in the same order.

- LLM Agents: The new AI hype you have been hearing about in recent weeks. These systems can plan, execute, and adapt their approach to the task at hand. Unlike their predecessors, AI agents can break complex problems down into steps and adjust their strategy dynamically.

Source: Armand Ruiz
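
To make the difference between the second and third generations more concrete, here is a minimal Python sketch of a fixed RAG workflow. It is a toy built on assumptions: a bag-of-words cosine similarity stands in for a real embedding model and vector database, and `fake_llm` is a hypothetical placeholder for a model call. What matters is that the steps (retrieve, then generate) are hard-coded and run in the same order for every query.

```python
import math
from collections import Counter

# Toy document store; a real system would use an embedding model and a vector database.
DOCS = [
    "Agents can call external tools and APIs.",
    "RAG combines vector search with LLM inference.",
    "Governance frameworks define accountability for AI systems.",
]

def embed(text: str) -> Counter:
    """Toy 'embedding' (an assumption): lowercase bag-of-words counts."""
    return Counter(word.strip(".,?!").lower() for word in text.split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, k: int = 1) -> list[str]:
    """Vector search step: rank documents by cosine similarity to the query."""
    q = embed(query)
    return sorted(DOCS, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; it just echoes the context it received."""
    return "Answer based on: " + prompt.split("Context: ", 1)[1]

def rag_answer(query: str) -> str:
    # Fixed workflow: always retrieve, then always generate. No decisions are made.
    context = " ".join(retrieve(query))
    return fake_llm(f"Question: {query}\nContext: {context}")

print(rag_answer("What does RAG combine?"))
```

Nothing in `rag_answer` chooses what to do next; that rigidity is exactly what the third generation relaxes.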

The Power and Promise of AI Agents

Understanding AI Agentic Behavior: The Foundation of Modern AI Agents

AI agentic behavior represents a fundamental shift in how artificial intelligence operates.

Unlike traditional AI systems that simply process and respond, agentic AI systems can act independently while pursuing defined objectives. This characteristic of "agenticity", the ability to be an active participant rather than a passive responder, forms the core of modern AI agents.

Agentic AI emerged as researchers developed systems capable of:

- Making autonomous decisions within defined parameters

- Understanding and pursuing goals over time

- Evaluating and adjusting their approaches

- Interacting proactively with their environment

- Learning from outcomes to improve future performance

This shift from reactive to agentic behavior created the foundation for today's AI agents.

From Agentic Principles to Functional Agents: What Is an AI Agent?

AI agents represent a major leap in what AI systems can do. Rather than simply processing information, they can:

- Autonomously determine the best approach to solve complex problems

- Break down tasks into logical sequences

- Adapt their strategies based on intermediate results

- Interact with other systems and APIs

- Learn from their experiences and improve over time
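
By contrast with a fixed workflow, an agent decides its next step from what it has observed so far. The minimal Python sketch below shows such a decide, act, observe loop. The tools (`search_flights`, `search_trains`), their data, and the rule-based `decide` function are all hypothetical; in a real agent an LLM would usually play the role of `decide`, and the tools would wrap external APIs. The `max_steps` cap is a deliberate design choice: it bounds how many actions the agent may take on its own.

```python
from typing import Callable, Optional

# Hypothetical tools; in a real agent these would wrap external APIs.
def search_flights(city: str) -> list[int]:
    return {"Paris": [320, 250]}.get(city, [])

def search_trains(city: str) -> list[int]:
    return {"Rome": [90]}.get(city, [])

TOOLS: dict[str, Callable[[str], list[int]]] = {
    "search_flights": search_flights,
    "search_trains": search_trains,
}

def decide(history: list[tuple[str, list[int]]]) -> Optional[str]:
    """Choose the next action from the observations so far.

    A rule-based stand-in for the LLM that would normally do this reasoning.
    Returns a tool name, or None when the goal is met or nothing is left to try.
    """
    if any(prices for _, prices in history):
        return None  # an option was found: stop
    tried = {tool for tool, _ in history}
    for tool in TOOLS:
        if tool not in tried:
            return tool  # adapt: try a tool that has not been used yet
    return None  # every tool failed

def run_agent(city: str, max_steps: int = 5) -> dict:
    history: list[tuple[str, list[int]]] = []
    for _ in range(max_steps):  # hard cap on autonomous actions
        action = decide(history)
        if action is None:
            break
        observation = TOOLS[action](city)  # act, then observe the result
        history.append((action, observation))
    prices = [p for _, obs in history for p in obs]
    return {"best_price": min(prices) if prices else None, "steps": [a for a, _ in history]}

print(run_agent("Rome"))
print(run_agent("Paris"))
```

For "Rome", the flight search returns nothing, so the agent changes strategy and tries trains; for "Paris", it stops as soon as the first tool succeeds. This sketch does not cover learning across runs, which would need additional machinery.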

Real-World Applications and Benefits

The potential applications of AI agents are vast and transformative:

- In business, they can automate complex workflows and decision-making processes

- In research, they can independently conduct experiments and analyze results

- In personal productivity, they can serve as proactive assistants that anticipate needs

Critical Governance Challenges in the Age of AI Agents

1. Autonomy and Control: Walking the Fine Line

The fundamental challenge of AI agent autonomy lies in balancing independent operation with responsible oversight. When AI agents can make decisions without immediate human supervision, several critical issues emerge:

- Unsupervised Decision-Making

AI agents operating independently might encounter scenarios their training didn't cover. For example, an AI agent managing a company's supply chain might make unexpected bulk purchases based on what it perceives as an opportunity, potentially creating financial risks.

- Unpredictable Outcomes

The complexity of AI agent decision-making processes can lead to unexpected results. Consider an AI agent optimizing city traffic flow: while trying to reduce congestion, it might inadvertently create new traffic patterns that affect emergency response times.

- Control Mechanisms

Maintaining meaningful human control becomes increasingly challenging as AI agents become more sophisticated. Traditional oversight methods might not be sufficient when agents operate at speeds and complexities beyond human comprehension.
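
One common way to keep meaningful human control is an approval gate: the agent may act alone below a risk threshold and must escalate above it. Here is a minimal Python sketch applied to the supply-chain example; `PurchaseOrder`, `APPROVAL_THRESHOLD`, and `cautious_reviewer` are hypothetical names, and the threshold values are arbitrary.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class PurchaseOrder:
    supplier: str
    amount: float  # in the company's currency

# Hypothetical policy value: orders above this amount need a human sign-off.
APPROVAL_THRESHOLD = 10_000.0

def execute_with_oversight(order: PurchaseOrder,
                           human_approves: Callable[[PurchaseOrder], bool]) -> str:
    """Let the agent act alone on small orders, but escalate large ones to a person."""
    if order.amount <= APPROVAL_THRESHOLD:
        return f"executed: {order.supplier} {order.amount:.2f}"
    if human_approves(order):
        return f"executed after approval: {order.supplier} {order.amount:.2f}"
    return f"blocked: {order.supplier} {order.amount:.2f}"

# Stand-in for a real review step: this reviewer rejects anything above 50,000.
def cautious_reviewer(order: PurchaseOrder) -> bool:
    return order.amount <= 50_000

print(execute_with_oversight(PurchaseOrder("Acme", 2_500), cautious_reviewer))
print(execute_with_oversight(PurchaseOrder("Acme", 80_000), cautious_reviewer))
```

The gate does not make the agent smarter; it limits the size of the mistakes it can make without a human seeing them first.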

2. Objective Alignment: Ensuring Human-Compatible Goals

The challenge of alignment goes beyond simple programming and touches on fundamental questions of human values and intentions:

- Human Intent Translation

Converting human intentions into precise, machine-actionable objectives is complex. An AI agent tasked with "maximizing customer satisfaction" might take extreme measures that conflict with other important business considerations.

- Optimization Boundaries

AI agents might pursue their objectives too aggressively or literally. For instance, an agent focused on reducing a company's carbon footprint might recommend shutting down essential services without considering broader societal impacts.

- Value Alignment

Ensuring AI agents understand and respect human values requires sophisticated frameworks that can handle nuanced ethical considerations and cultural differences.
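
The carbon-footprint example can be read as an optimization problem with a missing boundary. In the minimal Python sketch below, the candidate actions and their figures are invented for illustration. `naive_choice` optimizes the stated objective literally, while `constrained_choice` removes actions that break a hard constraint first, which is one simple (and far from complete) way to encode an optimization boundary.

```python
from typing import Optional

# Hypothetical candidate actions; the figures are illustrative, not real estimates.
ACTIONS = [
    {"name": "shut down an essential service", "co2_saved_t": 900, "essential_service_lost": True},
    {"name": "switch to a renewable energy contract", "co2_saved_t": 600, "essential_service_lost": False},
    {"name": "optimize building cooling", "co2_saved_t": 150, "essential_service_lost": False},
]

def naive_choice(actions: list[dict]) -> str:
    # Optimizes the stated objective literally: most CO2 saved, whatever else is lost.
    return max(actions, key=lambda a: a["co2_saved_t"])["name"]

def constrained_choice(actions: list[dict]) -> Optional[str]:
    # Same objective, but actions that violate a hard constraint are filtered out first.
    allowed = [a for a in actions if not a["essential_service_lost"]]
    if not allowed:
        return None  # nothing acceptable: escalate to a human rather than act
    return max(allowed, key=lambda a: a["co2_saved_t"])["name"]

print(naive_choice(ACTIONS))
print(constrained_choice(ACTIONS))
```

Hard constraints only cover the harms someone thought to write down, which is why value alignment remains an open problem rather than a configuration setting.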

3. Accountability Framework: Who's Responsible?

In my opinion, this is the main concern for AI governance: as AI agents become more autonomous, traditional accountability structures need fundamental revision.

- Responsibility Attribution

When an AI agent makes a decision that leads to negative consequences, determining responsibility becomes complex. Is it the developer, the operator, the organization deploying the agent, or the agent itself?

- Legal Framework Evolution

Current legal systems aren't fully equipped to handle AI agent accountability. New frameworks must address questions like:

- How should contracts entered into by AI agents be handled?

- Who is liable for automated decisions?

- What are the rights and obligations of AI agent operators?

- Oversight Mechanisms

Developing effective supervision systems that can monitor AI agent behavior without sacrificing the agents' efficiency is crucial. This includes:

- Real-time monitoring systems

- Audit trails for decision-making

- Intervention protocols for problematic behavior
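
Audit trails are most useful when they are hard to tamper with. A well-known technique is a hash chain: each log entry stores the hash of the previous entry, so editing an earlier entry breaks the chain. The Python sketch below is a minimal illustration of that idea; the `AuditTrail` class and its field names are hypothetical, not a standard API. A hash chain alone does not reveal entries deleted from the end of the log, so a real system would need something extra, such as keeping the latest hash outside the agent's reach.

```python
import hashlib
import json
from datetime import datetime, timezone

class AuditTrail:
    """Append-only log where each entry stores the hash of the previous one."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, agent_id: str, action: str, reason: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "agent_id": agent_id,
            "action": action,
            "reason": reason,
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the hash itself, with sorted keys for a stable encoding.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        """Return False if an entry was edited or the chain of hashes is broken."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True

trail = AuditTrail()
trail.record("supply-agent-1", "order 500 units", "stock below reorder point")
trail.record("supply-agent-1", "pause ordering", "price spike detected")
print(trail.verify())  # True
trail.entries[0]["action"] = "order 5 units"  # simulate tampering with the record
print(trail.verify())  # False
```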

4. Systemic Impacts: Understanding the Broader Implications

The introduction of multiple AI agents into complex systems creates new challenges for governance:

- Agent Interaction Dynamics

When multiple AI agents operate in the same environment, their interactions can create unexpected behaviors. For example, trading agents might develop unforeseen strategies that could impact market stability.

- Emergent Behaviors

The collective behavior of multiple AI agents might produce effects that are difficult to predict or control. Consider how multiple AI agents managing different aspects of urban infrastructure might interact in ways that create unexpected challenges.

- System Stability

Maintaining stability in systems where multiple AI agents operate requires:

- Robust coordination mechanisms

- Conflict resolution protocols

- System-wide monitoring and control

- Fail-safes for unexpected behaviors
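
Fail-safes for unexpected behaviors are often built as circuit breakers: after a number of anomalous actions, an agent is halted until a human resets it, while a global kill switch can stop every agent at once. The Python sketch below assumes a deliberately simple rule (a fixed number of consecutive anomalies) and uses hypothetical names (`CircuitBreaker`, `KILL_SWITCH`, `run_step`); real anomaly detection in a multi-agent system would be considerably more involved.

```python
import threading
from typing import Callable

# Hypothetical global kill switch: an operator calls KILL_SWITCH.set() to stop every agent.
KILL_SWITCH = threading.Event()

class CircuitBreaker:
    """Halts one agent after too many consecutive anomalous actions."""

    def __init__(self, max_consecutive_anomalies: int = 3) -> None:
        self.max_consecutive = max_consecutive_anomalies
        self.consecutive = 0
        self.tripped = False

    def allow(self) -> bool:
        return not self.tripped

    def report(self, anomalous: bool) -> None:
        # Count anomalies in a row; any normal action resets the counter.
        self.consecutive = self.consecutive + 1 if anomalous else 0
        if self.consecutive >= self.max_consecutive:
            self.tripped = True  # stays tripped until reset() is called

    def reset(self) -> None:
        """Meant to be called by a human operator after reviewing the incident."""
        self.consecutive = 0
        self.tripped = False

def run_step(agent: str, breaker: CircuitBreaker,
             action: Callable[[], int], is_anomalous: Callable[[int], bool]) -> str:
    if KILL_SWITCH.is_set() or not breaker.allow():
        return f"{agent}: halted"
    result = action()
    # Checked after acting: the breaker limits damage, it does not prevent the first anomalies.
    breaker.report(is_anomalous(result))
    return f"{agent}: {result}"

breaker = CircuitBreaker(max_consecutive_anomalies=2)
for size in [100, 5_000, 7_000, 200]:  # hypothetical order sizes proposed by a trading agent
    print(run_step("trader-1", breaker, lambda: size, lambda r: r > 1_000))
```

Here the two oversized orders in a row trip the breaker, so the fourth order is refused until someone calls `reset()`.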

What's Important Moving Forward: A Framework for Responsible Agentic AI

To harness the benefits of AI agents while mitigating risks, we need a comprehensive governance framework that addresses:

The Roles of the Stakeholders:

- Policymakers

- Create regulatory frameworks: Establishing laws and regulations for AI agent development and deployment

- Define compliance requirements: Setting standards for safety, transparency, and accountability

- Establish oversight mechanisms: Creating bodies and processes to monitor AI agent operations

- Technologists

- Develop transparent decision-making systems: Building AI agents with explainable actions and logic

- Implement safety protocols: Creating robust testing environments and fail-safes

- Create auditing tools: Developing systems to track and verify AI agent behaviors

- Design kill switches: Implementing emergency shutdown capabilities for problematic AI agents

- Organizations

- Establish ethical guidelines: Creating clear principles for responsible AI agent deployment

- Deploy responsible AI practices: Implementing risk assessment and management procedures

- Maintain compliance standards: Ensuring ongoing adherence to regulations and best practices

- Train personnel: Educating staff on AI agent management and oversight

- Researchers

- Study systemic impacts: Analyzing how AI agents affect various sectors and systems

- Evaluate safety measures: Testing and improving security protocols

- Develop new governance models: Creating innovative frameworks for AI agent control

- Assess emerging risks: Identifying potential future challenges and solutions

A Possible Framework for Agentic AI Governance

Agentic AI and AI agents are entering our lives. Whether you're a developer, business leader, or policymaker, your involvement in these discussions is crucial. Start by:

- Educating yourself about AI agent capabilities and limitations

- Participating in discussions about AI governance

- Advocating for responsible AI development in your organization

- Making sure your company has an AI governance framework, and follows it

Want to learn more about AI governance? Visit our blog, where we discuss news and updates on implementing AI responsibly and in a human-centered way.